Facial Emotion Analysis - Human vs Machine

Analysing someone's emotions is something that we as humans do instinctively, and often unintentionally, on a daily basis, and we are able to act on what we interpret almost instantly. How we use this information depends on our own ethics and morals: we may choose to be supportive of others, or malicious towards them. Computers, quite understandably, are not nearly as adept at understanding human emotion, and they rely on instructions to act on any information they gain - and this is where deep learning comes into play. Deep learning is a significant area of Computer Science, loosely inspired by the way the human brain takes in and learns from information, which aims to give computers a similar ability. It has various applications, ranging from self-driving vehicles to recognising objects within an image, and is a way of automating many aspects of our daily lives, such as smart assistants that can give you the latest news and update your shopping list. Human psychology, however, is very unpredictable, as no two people behave the same. Every individual differs from the next in mannerisms, behaviour, age and appearance, and these differences make it harder to help machines understand how we feel.

When comparing humans and machines, it is evident that humans are superior at perceiving emotions, as we are essentially already “programmed” with the natural instincts to do so. We are aware of social cues, reactions and general facial expressions, as well as stereotypes and social conformity. To a computer, however, these are initially meaningless.

Teaching a computer to perceive emotions is certainly a difficult task, as there are many factors to consider. We all consider a “neutral” face to be one which shows no emotion, but can a face actually show no emotion? People of different ethnicities, ages and overall appearances may have completely different “neutral” faces, with some naturally looking more joyful or more disheartened than others.

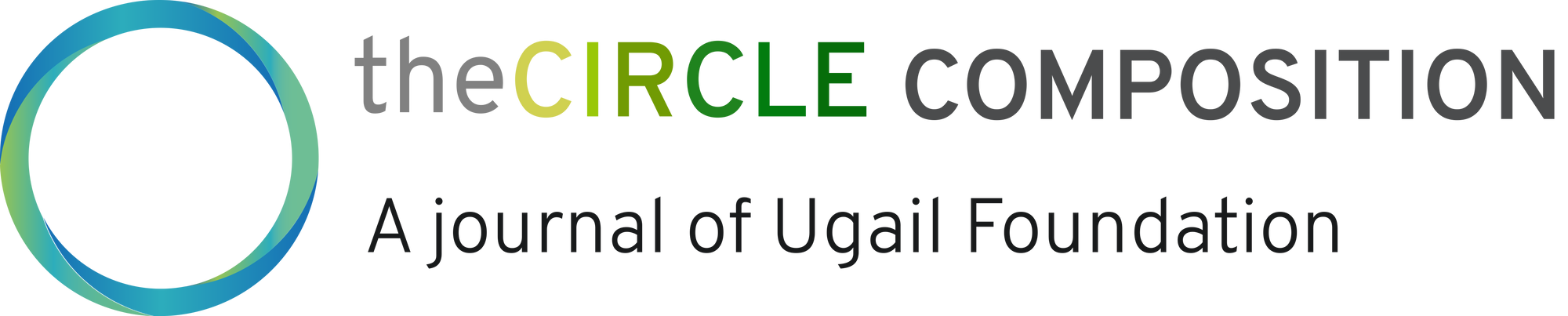

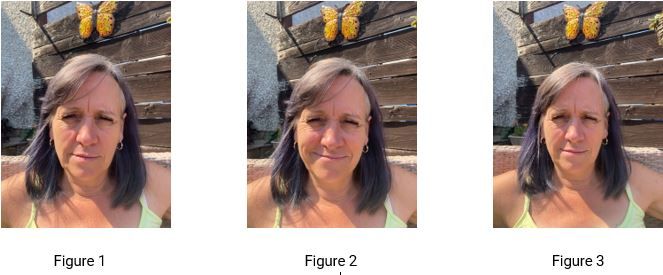

Look at these images - what emotions can you interpret from them, and which expression would you consider the “neutral” face?

If you hesitate to decide, that is not unusual - many people could read the neutral face as another expression entirely, such as a “concerned” or even a “disapproving” face. The fact is that everyone perceives emotions differently, which means that tasking a machine, incapable of feeling or conveying emotions itself, with analysing them is certainly challenging.

For a machine to gain even a basic understanding of emotions, it must first be trained to detect them from multiple inputs, such as the connotation of the emotion (whether it is negative or positive) and its intensity. It could possibly even be trained on thermal imagery, which could make emotions easier to identify in situations of stress, when your body temperature may rise and you emit more heat.
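As a rough illustration of how such inputs might be represented, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `EmotionReading` fields, the numeric ranges and the thresholds are hypothetical, and the hand-written rules stand in for what a trained model would learn from data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EmotionReading:
    """One hypothetical observation of a face (all fields are illustrative)."""
    valence: float                # -1.0 (negative) to 1.0 (positive)
    intensity: float              # 0.0 (barely visible) to 1.0 (very strong)
    skin_temp_delta: float = 0.0  # degrees C above baseline, e.g. from thermal imagery


def coarse_label(reading: EmotionReading,
                 neutral_band: float = 0.2,
                 stress_delta: float = 0.5) -> str:
    """Map a reading to a coarse label using assumed, hand-picked thresholds."""
    # Very weak expressions are treated as "neutral", whatever their valence.
    if reading.intensity < neutral_band:
        return "neutral"
    # A negative expression combined with a rise in skin temperature
    # is treated here as a sign of stress.
    if reading.valence < 0 and reading.skin_temp_delta >= stress_delta:
        return "stressed"
    return "positive" if reading.valence >= 0 else "negative"


if __name__ == "__main__":
    print(coarse_label(EmotionReading(valence=0.8, intensity=0.9)))
    print(coarse_label(EmotionReading(valence=-0.6, intensity=0.7, skin_temp_delta=1.0)))
```

Even this toy version shows why the “neutral” face is awkward: where the neutral band ends is a choice someone has to make, and different people would draw that line differently.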

Techniques such as these are already being used throughout the Internet of Things, for example within smart assistants that are being trained to detect when someone is feeling emotionally distressed. Companies such as Amazon have been performing emotion recognition (ER) research for several years.

Alternatively, these methods could be used to help practitioners perform medical diagnoses, for example by sampling an individual's behavioural patterns and emotions and checking them for possible symptoms of a mental illness or a condition such as autism or anxiety. Having a machine perform this task rather than a human has both drawbacks and advantages. The machine does not have the interpersonal experience needed to give as accurate a verdict; however, it can provide a psychologist with a second opinion - or possibly an insight into something they may have missed.

In conclusion, while we as humans can observe and interpret emotions incredibly well, teaching machines to do the same will ultimately be beneficial to us, potentially leading to new advancements in medicine and even saving lives.

References

Helping computers perceive human emotions, Rob Matheson, MIT News

https://news.mit.edu/2018/helping-computers-perceive-human-emotions-0724

Personalized “deep learning” equips robots for autism therapy, Becky Ham, MIT News

https://news.mit.edu/2018/personalized-deep-learning-equips-robots-autism-therapy-0627

How do humans perceive emotion?, LI Wen, Department of Psychology and Program of Neuroscience, Florida State University

https://res-www.zte.com.cn/mediares/magazine/publication/com_en/article/2017S2/LI_Wen.pdf

Multimodal and Multi-view Models for Emotion Recognition, Gustavo Aguilar, Viktor Rozgic, Weiran Wang, Chao Wang, University of Houston, Amazon.com

https://arxiv.org/pdf/1906.10198.pdf

Can computers feel?, Amelie Schreiber

https://towardsdatascience.com/can-computers-feel-69b234eeff70

Author Biography

Alfie Reeves was born in 2004 in the “city that doesn’t exist”, otherwise known as Bielefeld, Germany, and now lives by the popular east coast of England. An academically minded person, Alfie has always been determined to succeed academically - studying Mathematics, Further Mathematics, Physics and Computer Science at A-Level - and is keen on pursuing a research career in Computer Science and Mathematics. Alfie also considers himself to be musically inclined, with a love of music whether as a listener or a performer.

Cite this article as:

Alfie Reeves, Facial Emotion Analysis - Human vs Machine, theCircle Composition, Volume 3, (2022). https://theCircleComposition.org/facial-emotion-analysis-human-vs-machine/