How is Artificial Intelligence used to Recognise Facial Emotions?

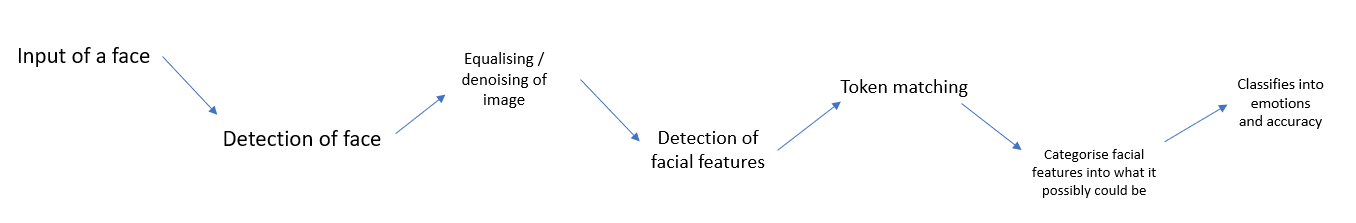

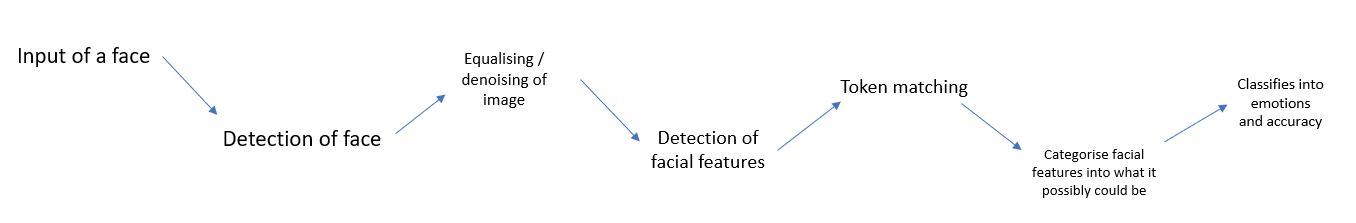

When AI recognises facial emotions, it goes through a few main steps, as listed below.

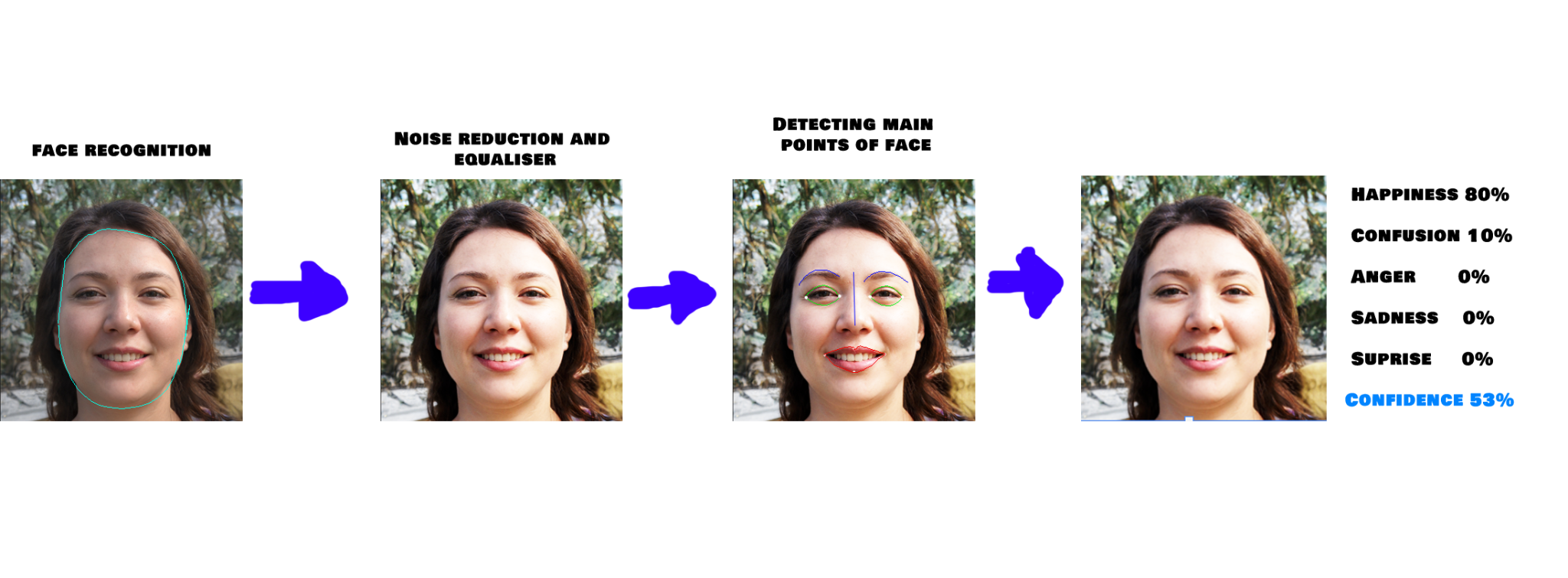

First, the AI detects the face. It then equalises the contrast and reduces noise in the photo, which makes the image clearer and easier to analyse. Next, it marks the important facial features and their positions, looking at the shape of each feature. This information is turned into data (tokens), which is compared with what the AI learned from the image libraries it was trained on. From this it works out which emotion the face most likely shows and how certain it is. Finally, it shows the most likely emotions, such as happiness, sadness or anger, together with a confidence rating. A good example of this is Google Cloud Vision, where you can test an image of a person and it returns how likely each emotion is.
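The comparison and confidence steps can be sketched in Python. This is a minimal sketch under stated assumptions, not how Google Cloud Vision or any other product actually works. It assumes the face has already been detected and reduced to two hypothetical measurements, mouth width and eyebrow raise, each scaled from 0 to 1. The training examples are made up. In place of a real trained model, it uses a simple nearest-centroid classifier with a softmax to produce the confidence rating. Real systems typically use neural networks trained on large sets of labelled faces.

```python
import math

# Hypothetical, made-up training data: each face is reduced to two numbers,
# [mouth width, eyebrow raise], both scaled 0 to 1. Not real measurements.
TRAINING_DATA = {
    "happiness": [[0.80, 0.30], [0.90, 0.35], [0.85, 0.25]],
    "sadness":   [[0.20, 0.10], [0.25, 0.15], [0.15, 0.05]],
    "surprise":  [[0.60, 0.90], [0.70, 0.95], [0.65, 0.85]],
}


def centroid(vectors):
    """Average the training vectors for one emotion."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def train(data):
    """'Learn' one average feature vector (centroid) per emotion."""
    return {emotion: centroid(vectors) for emotion, vectors in data.items()}


def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def classify(features, centroids, sharpness=5.0):
    """Return (emotion, confidence) pairs, most likely first.

    Emotions whose average face is closer to `features` score higher.
    A softmax over the negative distances turns the scores into
    confidences that add up to 1. `sharpness` is a hypothetical tuning knob.
    """
    scores = {e: -sharpness * distance(features, c) for e, c in centroids.items()}
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {e: math.exp(s - top) for e, s in scores.items()}
    total = sum(exps.values())
    return sorted(((e, v / total) for e, v in exps.items()),
                  key=lambda pair: pair[1], reverse=True)


centroids = train(TRAINING_DATA)
for emotion, confidence in classify([0.82, 0.28], centroids):
    print(f"{emotion}: {confidence:.0%}")
```

A nearest-centroid rule is used here only because it is short enough to read in one go. The `sharpness` value controls how quickly confidence falls away for emotions whose average face is further from the input.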

As good as a human?

When talking to someone, you may not realise it, but you are subconsciously reading a lot of information from just a few details. Human communication comes largely from two channels: verbal communication, such as speech, and non-verbal communication, such as body language and facial expressions. This article focuses on the recognition of facial expressions, especially by artificial intelligence, where it can be very influential. For example, as recently as this year (2022), there have been tests using it to detect depression in people. A good starting point is to look at how AI recognises human expressions.

Advances in computer vision and machine learning have helped make facial expression recognition more accurate. This is partly driven by the fast-growing market for emotion-detection software, which is going from 21.6 billion dollars to 56 billion, more than double (about 2.6 times). The demand for higher-quality facial recognition shows no sign of slowing.

How it works

First, an optical sensor such as a webcam or smartphone camera captures the face, either in real time or from a pre-saved photo or video, and a computer algorithm identifies the human face before any further processing. The algorithm then carries out "histogram equalisation", which increases the contrast of the photo or video by spreading out its brightness values, and it may also reduce any remaining noise in the image. Next, the algorithm tries to detect the parts of the face, from facial features to skin tone to movement in the face. Using this data, it gathers "clues" about what the person might be feeling, which it stores as tokens. Because it has been trained on previous images, it can use what it learned during training to categorise what the face shows. Once the image is fully processed, the AI reveals the result of its analysis. The result is broken down into several generic emotions, such as the ones seen in this image, possibly with other information showing how certain the AI is of its answer.
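The two pre-processing steps in this paragraph, histogram equalisation and noise reduction, can be sketched in plain Python. This is a minimal illustration under assumptions. The "photo" is a made-up 4 by 4 grid of grey levels from 0 to 255 rather than a real image. Equalisation uses the standard cumulative-histogram mapping. Noise reduction uses a 3 by 3 median filter, which is one common choice; the article does not say which filter is used. The steps run in the same order as the text describes. Real systems would normally use an image library such as OpenCV, for example its `equalizeHist` and `medianBlur` functions, instead of hand-written loops.

```python
from statistics import median_low


def equalise_histogram(image, levels=256):
    """Spread out pixel brightness values to increase contrast.

    `image` is a list of rows of integer grey levels in range(levels).
    Each value is mapped through the cumulative histogram so that the
    grey levels used by the image are spread across the full range.
    """
    pixels = [p for row in image for p in row]
    n = len(pixels)
    # Count how many pixels have each brightness value.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    # Cumulative distribution: number of pixels at or below each value.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    if n == cdf_min:  # every pixel has the same value: nothing to stretch
        return [row[:] for row in image]
    lookup = [max(0, round((c - cdf_min) / (n - cdf_min) * (levels - 1)))
              for c in cdf]
    return [[lookup[p] for p in row] for row in image]


def median_denoise(image):
    """Replace each pixel by the median of its 3x3 neighbourhood.

    Isolated bright or dark specks are removed because their neighbours
    outvote them. Edge pixels use only the neighbours that exist, and
    median_low keeps the result an actual grey level from the window.
    """
    height, width = len(image), len(image[0])
    result = []
    for y in range(height):
        new_row = []
        for x in range(width):
            window = [image[j][i]
                      for j in range(max(0, y - 1), min(height, y + 2))
                      for i in range(max(0, x - 1), min(width, x + 2))]
            new_row.append(median_low(window))
        result.append(new_row)
    return result


# Made-up low-contrast "photo" (values squeezed between 100 and 130)
# with one bright speck of noise (255) in the second row.
photo = [
    [100, 105, 110, 115],
    [105, 255, 115, 120],
    [110, 115, 120, 125],
    [115, 120, 125, 130],
]
enhanced = equalise_histogram(photo)
smoothed = median_denoise(enhanced)
for row in smoothed:
    print(row)
```

Equalisation first spreads the grey levels, which were squeezed between 100 and 130, much more widely across the 0 to 255 range. The median filter then replaces the lone bright speck with a value taken from its neighbours.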

What can it do?

Overall, for AI to detect facial emotions, it has to go through several processing steps, as well as prior training, to understand what an image may show. Unlike humans, a computer cannot easily identify unique details about a person's facial features, so it looks at generic details instead. Although these details may differ only slightly, they can reveal many patterns that even a human may not easily recognise. This makes the technology potentially very useful for detecting conditions like depression, since it is not always obvious that someone is suffering from this horrible disorder, not even to close family and friends. Slight changes in facial patterns may therefore help in working out what a person may be going through.

References

Facial Expression Recognition using Neural Network – An Overview

https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.683.3991&rep=rep1&type=pdf

Emotion AI: How Technology Takes A Human Face

Test it out: how it breaks down into emotions

Author Biography

Muhammad Khan is a 17-year-old currently living in Sheffield, England. With a natural curiosity about the world and everything in it, he likes to learn, no matter how random or trivial the detail. Muhammad aspires to study environmental science as well as learn more about the natural world. Besides that, he is a typical nerd with interests in Yu-Gi-Oh! and all things computers, whether it be programming Arduino projects, building keyboards and mice, or even video editing and graphic design. Muhammad also enjoys rock climbing, hiking and nature walks, during which he often gets distracted by different plants and animals.

Cite this article as:

Muhammad Khan, How is Artificial Intelligence used to Recognise Facial Emotions?, theCircle Composition, Volume 3, (2022). https://theCircleComposition.org/how-is-artificial-intelligence-used-to-recognise-facial-emotions/